Human-AI collaboration system

The project is a collection of studies on how AI was being used as of 2023. It examines AI from several perspectives, from ethical models and debates to practical applications in both military and non-military fields. The outcome of this investigation is a framework for enhancing human-AI collaboration, with a particular focus on maximizing the synergy between designers and artificial intelligence in creative and problem-solving processes.

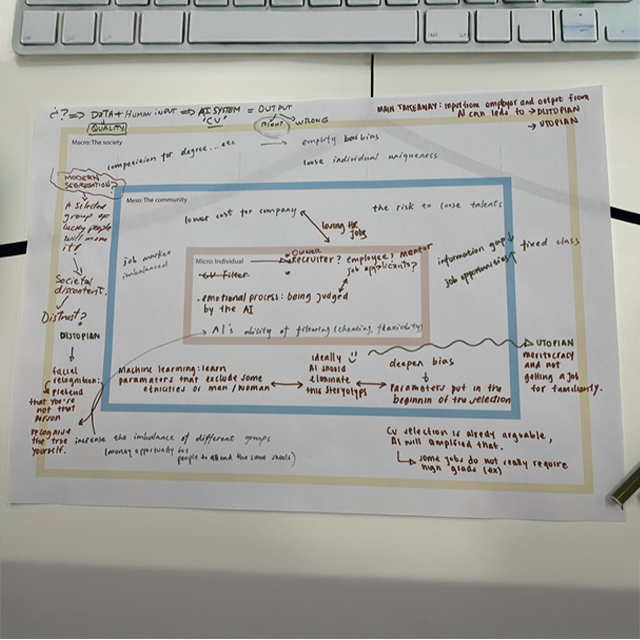

Mapping the current state of AI in the workplace showed that AI is a tool embedded in its context rather than an isolated entity.

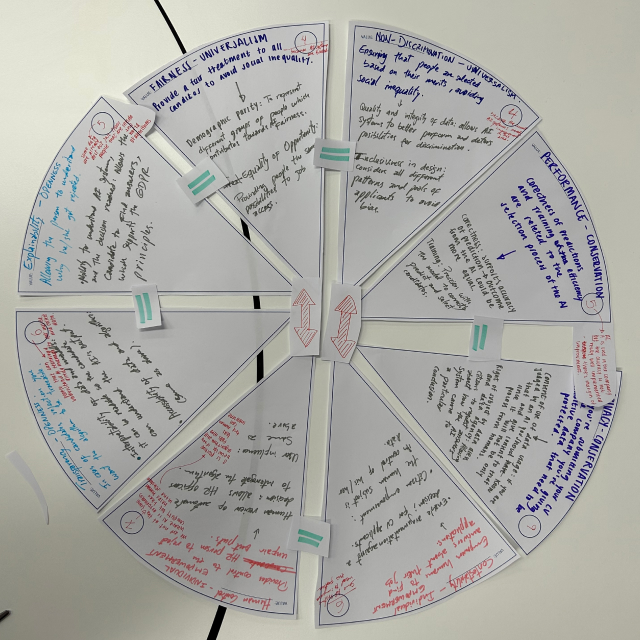

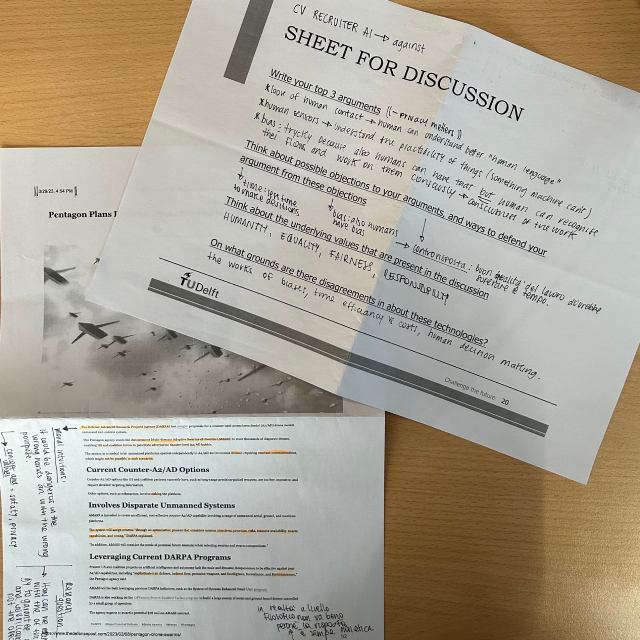

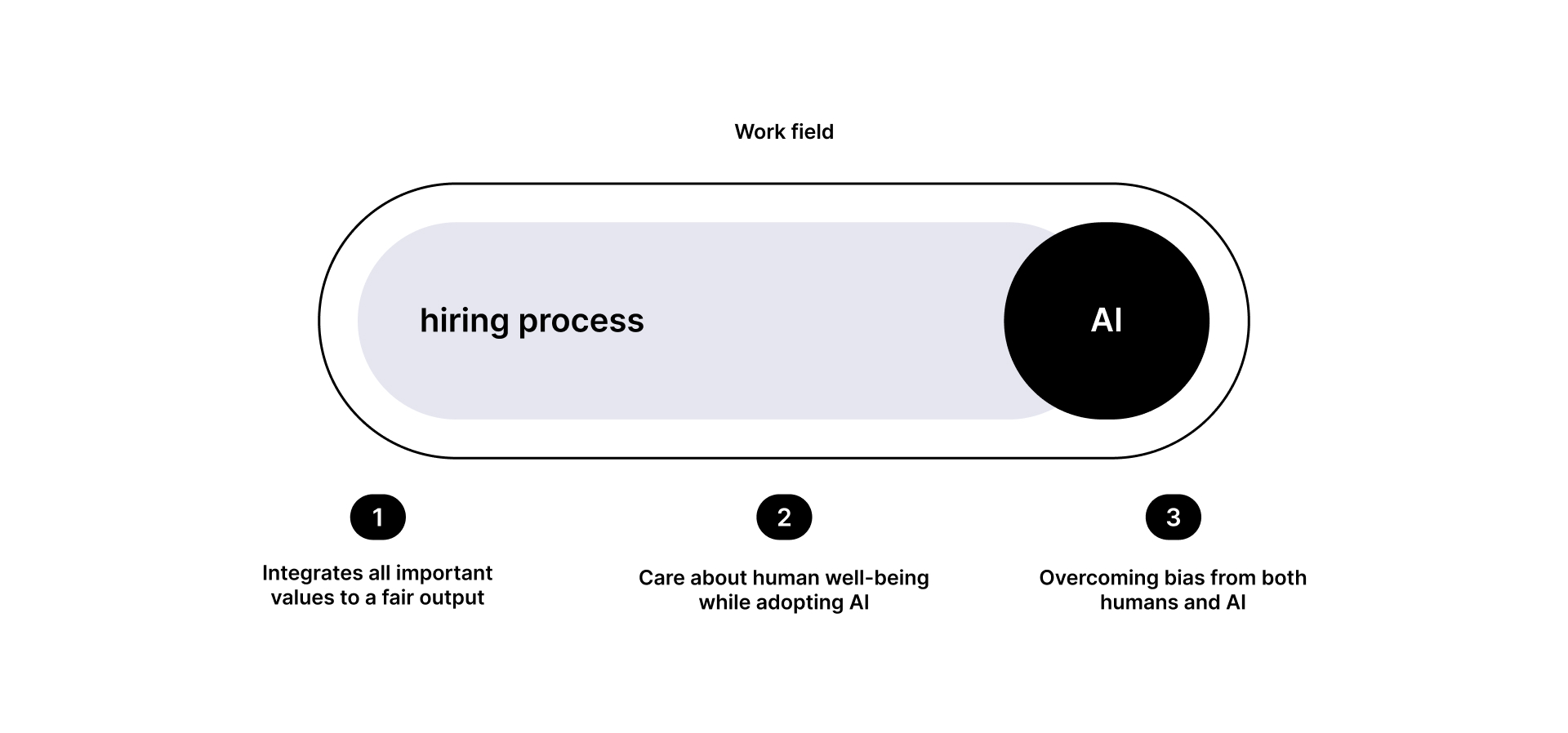

Analyzing human control and non-discrimination in AI recruitment revealed tensions and challenges. It also underscored the importance of defining values early so that they align with stakeholders' needs.

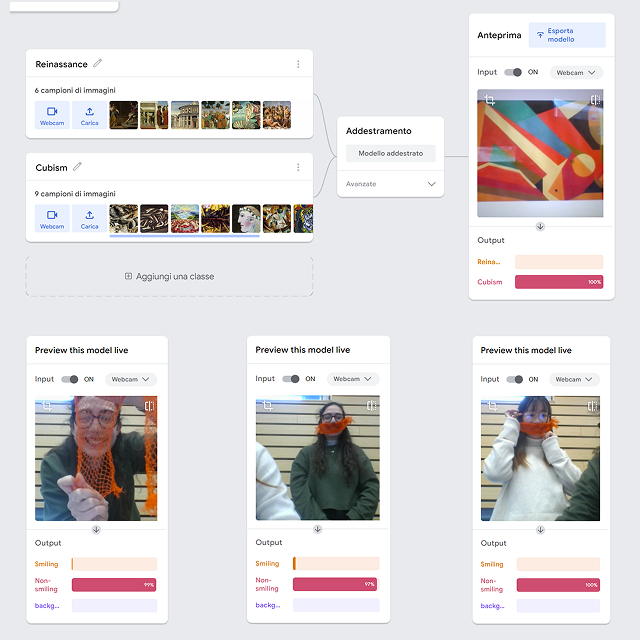

Hacking AI systems showed that identifying and eliminating vulnerabilities is crucial to making AI more reliable across applications.

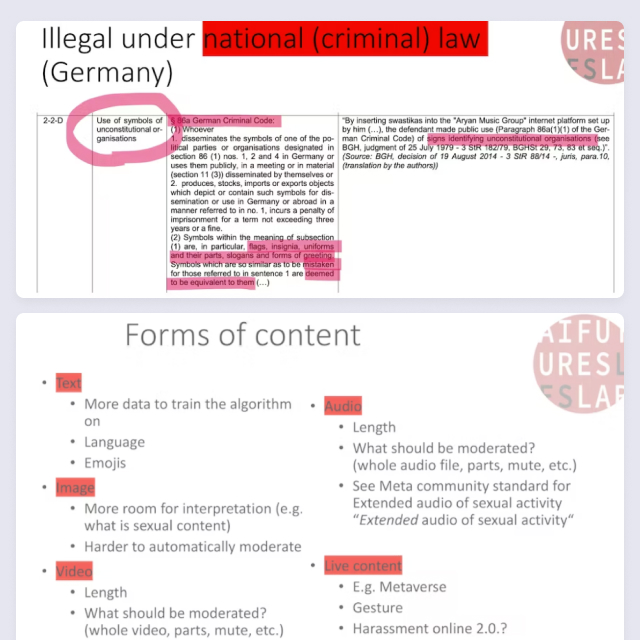

Content coding and moderation explored AI's role in managing privacy and legality. It showed AI's potential for categorizing relevant content and supporting moderation in workplace settings.

A philosophical analysis of AI ethics focused on moral intuitions and values. It provided insights into human rights and bias recognition in the workplace and encouraged deeper ethical reflection.

Using AI for self-reflection and bias analysis demonstrated that what an AI recognizes is shaped by how it is programmed. It also showed that AI can help address bias when it is trained on self-labeled datasets.

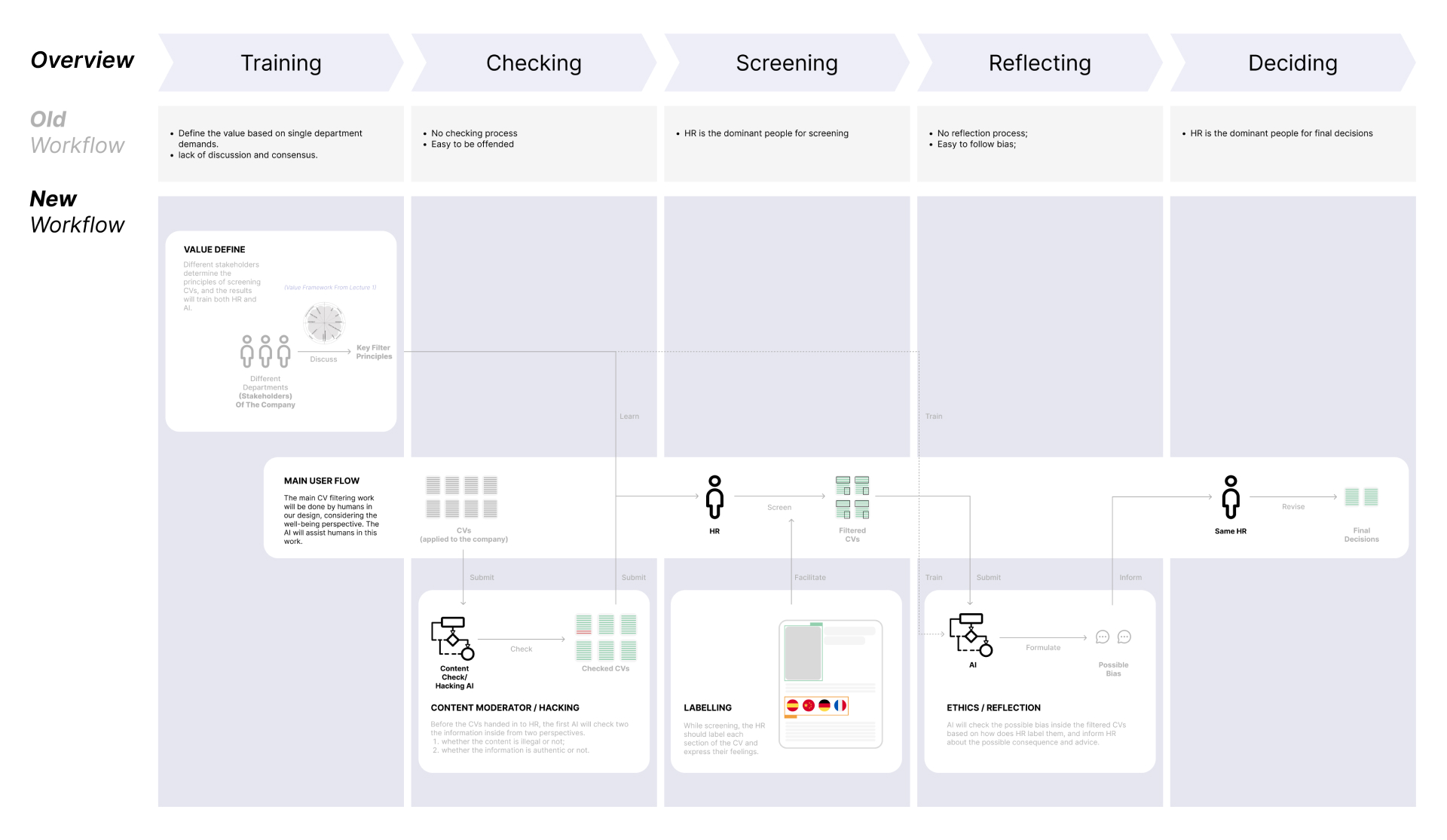

The resulting system consists of five phases and integrates AI at two points:

- training both HR staff and the AI on shared values;
- using AI to check content and authenticity.

Human oversight and collaboration are maintained throughout the process. The aim is to improve HR efficiency, reduce bias, and create a more equitable job market.